Your A/B Tests Are Lying to You, and Costing You Big $$$

Picture this…

It's Friday afternoon, and Sarah, the marketing director, calls her team into the conference room to celebrate.

After six weeks of rigorous testing and a $50,000 dip into their annual budget, they finally have a winner. The red CTA button beat the blue button by 2.3 percentage points, statistically significant at 95% confidence. High fives all around.

Victory tastes sweet—PASS THE CUPCAKES.

But Here's What Nobody's Saying Out Loud

“We just spent 6 weeks and $50K to learn absolutely nothing that matters.”

While Sarah's team was debating Pantone swatches, their competitor was testing an entirely different value proposition. And guess what? That competitor just left them in the dust.

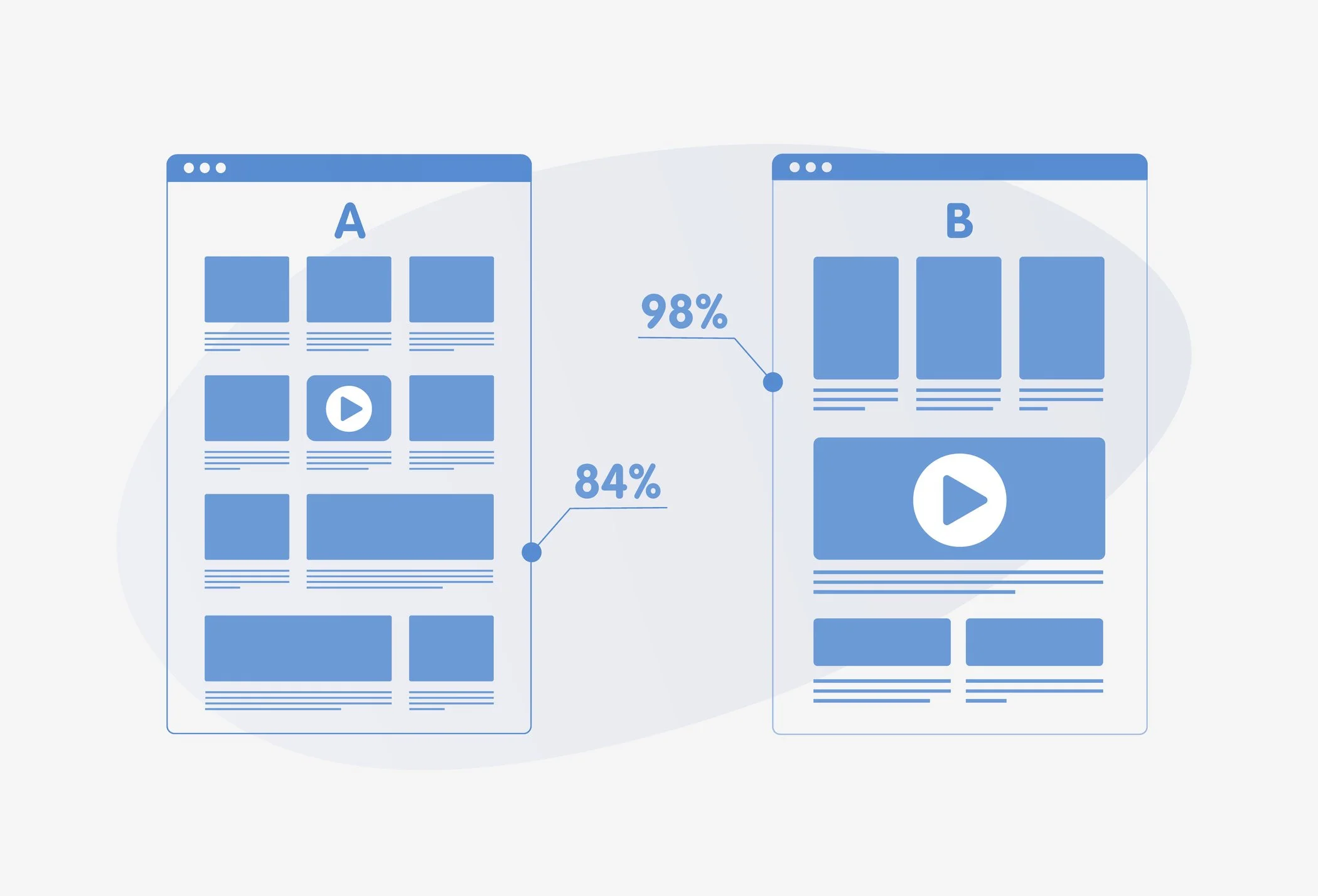

Here's the brutal truth that nobody in our industry wants to admit: most A/B tests are just sophisticated procrastination masquerading as strategy. We've become so obsessed with statistical significance that we've forgotten to ask whether any of this actually matters to our bottom line.

Welcome to Incremental Optimization Hell

Let's be honest about what we're really testing.

We test subject lines.

We test CTA button colors.

We test whether the headline should be in Helvetica or Arial.

We test images.

We test whether to use "Buy Now" or "Shop Now."

We test whether the signature should be blue ink or black ink.

You know what we almost never test? Entirely different offers. Radical creative approaches that make the legal team nervous. New audience segments that "don't fit our customer profile." Controversial positioning that might actually make the reader feel something. Imagine that!

“…most A/B tests are just sophisticated procrastination masquerading as strategy”

Why? Because small tests feel safe. They're easy to execute, easy to measure, and easy to defend when your boss asks what you've been doing for the past quarter. Nobody ever got fired for testing envelope teasers.

But the math should terrify you: A 5% lift on a mediocre control package is still mediocre. You're just making it slightly less mediocre.

I watched a company spend an entire year testing 47 variations of the same direct mail package. They tested envelope teasers, CTA colors, letter lengths, P.S. placement, and whether the URL should be on the left or right side. After twelve months of diligent testing, their best performer delivered a 3.2% lift over the control.

Then a competitor entered the market with a completely different package format and destroyed them with a 40% improvement. Game over. Wah, wah, wah. Thanks for playing.

When "Statistically Significant" Means Absolutely Nothing

What's everyone's favorite phrase these days? Statistically significant. It sounds so official, so scientific, so unassailable. Your test reached p<0.05, which means you can confidently declare a winner and move on with your life.

But here's the uncomfortable truth that nobody wants to discuss at the testing strategy meeting: statistical significance and business significance are not the same thing.

Not even close.

Let's run the numbers on a typical "winning" test. You found a statistically significant winner that improved response rates from 2.1% to 2.3%. That's a 9.5% relative lift, which sounds impressive when you present it in the meeting with that bar chart showing the blue bar slightly taller than the red bar.

“We have to ask ourselves… what is the opportunity cost of all this micro-testing?”

But the absolute difference is two-tenths of a percentage point. On a campaign of 100,000 pieces, that's 200 extra responses. At a $50 average order value, you just generated an extra $10,000 in revenue. Meanwhile, the test cost $35,000 to execute properly and delayed your campaign by six weeks while you waited for statistical significance.
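The gap between the two kinds of significance is easy to check for yourself. Below is a minimal Python sketch that runs the example above both ways. A few assumptions up front: the helper names (`two_proportion_p_value`, `business_impact`) are hypothetical; the pooled two-proportion z-test is one common way to get a p-value for response rates, not necessarily what your testing platform uses; and the test-cell size (50,000 pieces per cell, so 1,050 versus 1,150 responses) is invented for illustration, since the example doesn't say how big the test was. The rollout volume, $50 average order value, and $35,000 test cost come straight from the paragraph above.

```python
import math
from decimal import Decimal


def two_proportion_p_value(responses_a: int, mailed_a: int,
                           responses_b: int, mailed_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    rate_a = responses_a / mailed_a
    rate_b = responses_b / mailed_b
    pooled = (responses_a + responses_b) / (mailed_a + mailed_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / mailed_a + 1 / mailed_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided tail area of the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    return math.erfc(abs(z) / math.sqrt(2))


def business_impact(control_rate: Decimal, variant_rate: Decimal,
                    rollout_volume: int, avg_order_value: Decimal,
                    test_cost: Decimal) -> dict:
    """Translate a 'winning' lift into responses and dollars."""
    absolute_lift = variant_rate - control_rate       # 0.002 means 0.2 percentage points
    relative_lift = absolute_lift / control_rate      # the figure that looks good on a bar chart
    extra_responses = absolute_lift * rollout_volume  # responses gained on the rollout
    extra_revenue = extra_responses * avg_order_value
    return {
        "absolute_lift_points": absolute_lift * 100,
        "relative_lift_pct": (relative_lift * 100).quantize(Decimal("0.1")),
        "extra_responses": extra_responses,
        "extra_revenue": extra_revenue,
        # Negative means the test cost more than the lift earned back.
        "net_after_test": extra_revenue - test_cost,
    }


# Hypothetical test cells: 50,000 pieces each, 2.1% vs 2.3% response.
p_value = two_proportion_p_value(1_050, 50_000, 1_150, 50_000)

# Rollout figures from the example: 100,000 pieces, $50 AOV, $35,000 test cost.
impact = business_impact(
    control_rate=Decimal("0.021"),
    variant_rate=Decimal("0.023"),
    rollout_volume=100_000,
    avg_order_value=Decimal("50"),
    test_cost=Decimal("35000"),
)

print(f"p-value: {p_value:.3f}")
for name, value in impact.items():
    print(f"{name}: {value}")
```

Under those assumptions the p-value comes in under 0.05, so the test clears the bar for a statistically significant win, while `net_after_test` is -25,000: the lift earns $10,000 against a $35,000 test. `Decimal` is used for the money math so the 200 responses and $10,000 come out exact rather than as floating-point approximations.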

Oh, and during those six weeks, your competitor tested a radically different offer and discovered a 60% lift worth half a million dollars. You bunted and got on first base. They swung for the fences and knocked it out of the park.

Congratulations on your statistically significant button color, though. It looks great in the PowerPoint.

The question nobody ever asks in these meetings is this: what's the opportunity cost of all this micro-testing? What more could we have learned instead? What big, transformative insights are we missing while we're obsessing over whether the testimonial should appear above or below the fold?

The Big Swings That Actually Move the Needle

So what should you be testing instead?

Let’s be clear: We’re not talking about testing "Free Shipping" versus "10% Off." We’re talking about the tests that make your CFO nervous and your creative team excited.

Test offers that fundamentally change how customers engage with your business. Don't test minor variations of the same discount. Test subscription models against one-time purchases. Test "Pay Nothing for 90 Days" against "50% Off Today." Test whether your entire pricing structure is leaving money on the table.

Test audience expansion that everyone tells you won't work. Stop endlessly optimizing for your existing customer profile and start exploring completely different demographic or psychographic segments. I watched a luxury brand test Gen Z audiences against all conventional wisdom and internal resistance. They found a $2 million untapped opportunity that "shouldn't have existed" according to their personas.

Test radical creative divergence that looks nothing like your control package. Long-form storytelling versus short benefits-driven copy. Serious, authoritative tone versus irreverent, humorous voice. I saw a financial services company test their boring, compliance-approved design against a bold magazine-style layout that made everyone uncomfortable. The magazine version won by 34%. Turns out people don't want their investment guidance to look like a stale tax form.

Test channel integration strategies instead of individual channel tactics. Stop testing "email subject line A versus email subject line B" and start testing "email alone versus direct mail plus email sequence versus SMS plus direct mail." The interaction effects between channels often matter more than any single channel optimization.

>> Test subscription models against one-time purchases

>> Test "Pay Nothing for 90 Days" vs. "50% Off Today"

>> Test whether your pricing leaves money on the table

Notice the pattern here?

These tests are legitimately scary because they might fail spectacularly. Yes, they might.

Your safe little button-color test might underperform by 2%, while these tests could crater by 30%. That risk is exactly why they're worth running: fortune favors the bold. When they win, they don't give you a 3% lift. They transform your entire business.

A New Framework for Testing That Actually Matters

So how do we break free from incremental optimization prison?

Start by flipping your testing philosophy upside down.

Test fewer things, but make them matter.

Instead of running 40 incremental tests per year, run four big-swing tests. Each test should have the potential for at least a 30% improvement. If it can't move the needle that much, it's not worth your time.

Embrace practical significance over statistical significance. Before you design any test, ask yourself: "If this wins, does it fundamentally change our strategy?" If the answer is no, you're testing the wrong thing. A statistically significant nothing is still nothing.

Protect at least 20% of your budget for crazy ideas. You know what I'm talking about—the ideas your team is afraid to suggest in meetings. The creative concepts that make your legal department nervous. The audience segments that everyone "knows" will never work. These are exactly the ideas worth testing because nobody else has the courage to try them.

“Protect 20% of your budget for crazy ideas”

And please, for the love of response rates, start measuring opportunity cost. Every week you spend testing envelope colors is a week you're not testing a breakthrough offer. Every dollar invested in optimizing your mediocre control is a dollar not invested in discovering what could replace it entirely. The cost of a failed test is obvious and easy to measure. The cost of never testing boldly enough is invisible—and vastly more expensive.

The Uncomfortable Truth

Here's what keeps me up at night, and it should keep you up too: your control package isn't losing to competitors with better button colors. It's losing to marketers who are willing to test the things that actually scare them.

While you're celebrating your statistically significant 2% lift, someone else is discovering that everything you thought you knew about your audience is wrong. They're finding new segments you ignored. They're testing offers you deemed "too risky." They're trying creative approaches that would make your legal team break out in hives.

And they're winning. Not every time. They lose sometimes, and lose big. But when they win, they strike gold.

So let me ask you this: what's the boldest test you're afraid to run? What's the idea that keeps getting shot down in planning meetings because it's "too different" or "off brand" or "will never work"?

That's the idea you should explore. That’s the one you should be testing next.

Stop lying to yourself with statistically significant 2% lifts. Start testing things that could double or triple your response rates—or at least teach you something valuable about why they failed.

Because in direct marketing, the most expensive test isn't the one that fails. It's the one you were too afraid to run.

Need Help Getting Started?

If you need help crafting a bold, winning direct mail campaign, or any other direct marketing effort, let us know. Jacobs & Clevenger can apply our proven techniques and tactics to increase the performance of programs you're already running or to kick off a new one.

J&C has over 40 years of direct marketing experience and would be happy to learn more about your company and your goals.

Contact us today for an honest assessment of how we can work with you to achieve better results.